Improving the quality of CT images with AI and Deep Learning

In the recently concluded Innosuisse project DIR3CT, researchers from ZHAW’s Centre for AI (CAI) improved the quality of CT images with the help of AI and Deep Learning. The results of the project, which was carried out in collaboration with Varian Medical Systems and ZHAW’s Institute for Applied Mathematics and Physics (IAMP), could improve radiation therapy for patients with cancer.

Cone beam computed tomography (CBCT) is widely used in clinical radiation therapy to quickly acquire volumetric (3D) images of the patient’s anatomy during treatment, e.g. to position the patient correctly. However, on-board CBCT devices suffer from reduced image quality compared with diagnostic CT scans, as well as from artefacts induced by patient motion (e.g. due to breathing, heartbeat, muscle relaxation or digestion).

Varian Medical Systems (now a Siemens Healthineers company), a world market leader in radiation therapy, teamed up with researchers from two ZHAW institutes, the Centre for AI (CAI) and the Institute for Applied Mathematics and Physics (IAMP), to reduce motion artefacts and thus improve CBCT image quality with Artificial Intelligence (AI) and Deep Learning.

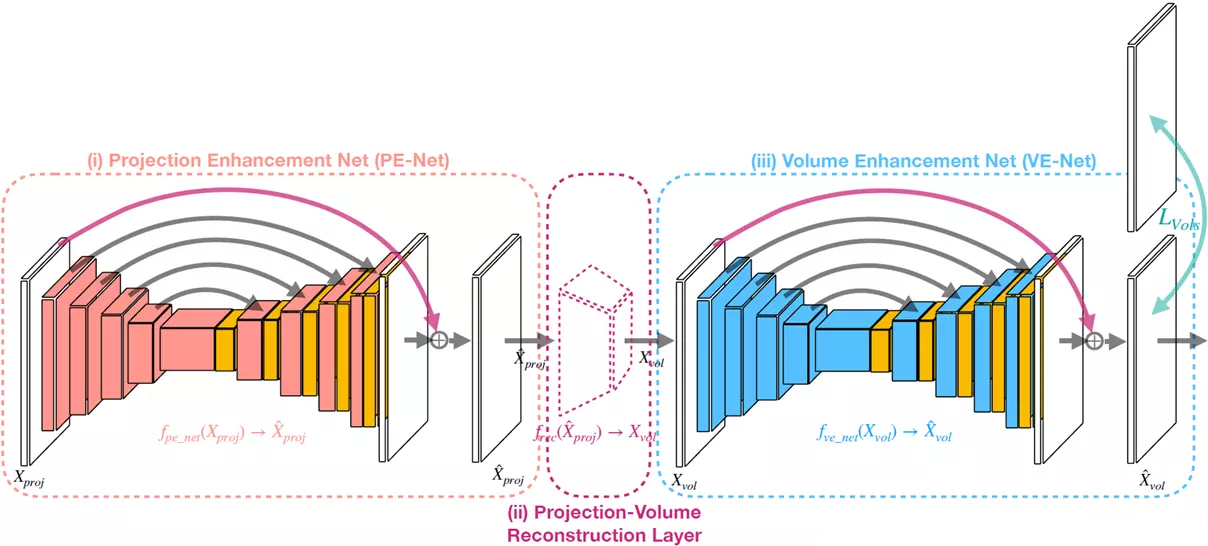

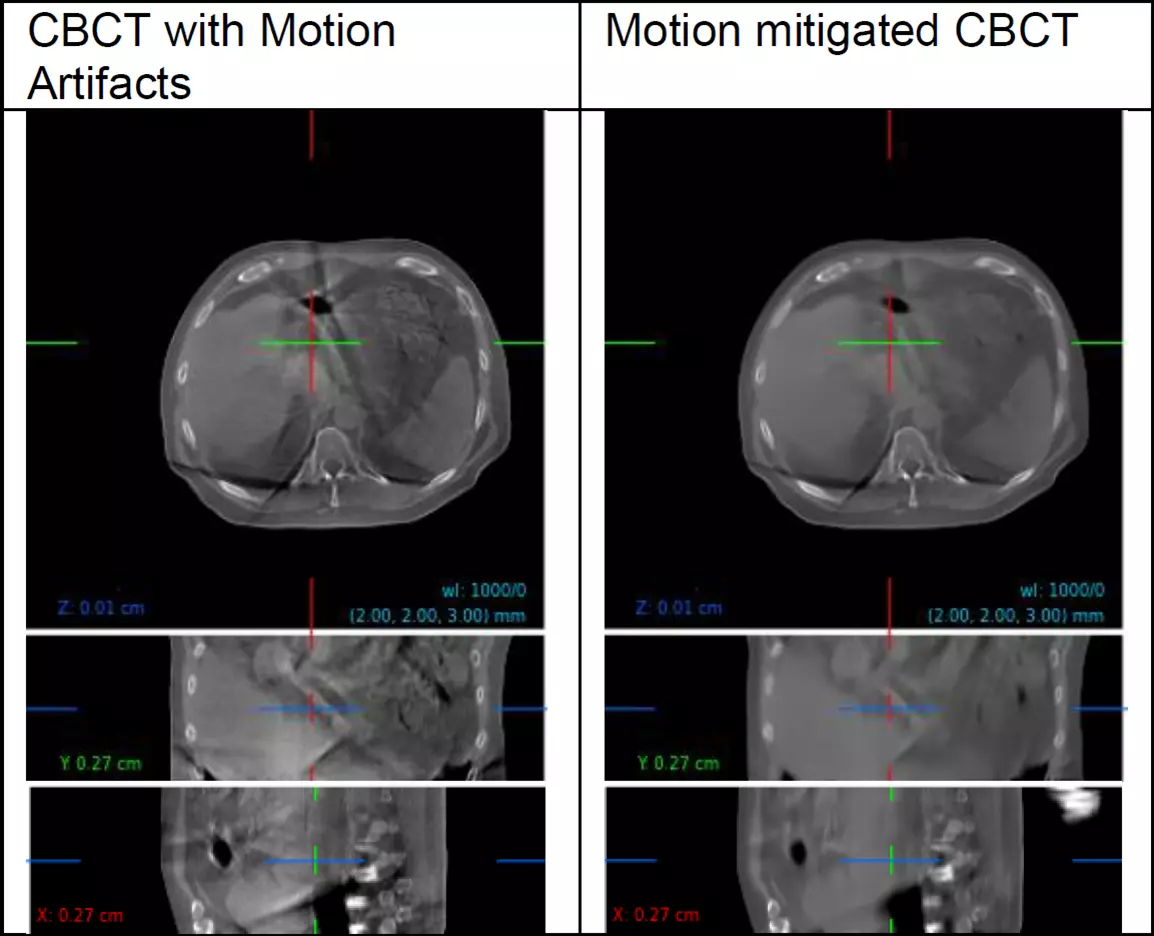

In the project DIR3CT (funded by Innosuisse), a motion mitigation solution was developed in which deep neural networks are trained to correct the artefacts. The developed “dual-domain” approach operates on both the 2D X-ray projections and the reconstructed 3D CBCT image, and can significantly improve CBCT image quality. This allows end-to-end training of the model (see left figure), embedded within the CBCT image reconstruction. An example of the original and motion-mitigated CBCT is shown in the right figure.
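To make the dual-domain data flow more concrete, here is a minimal toy sketch in Python. It is not the DIR3CT model: the two-view projector, the unfiltered backprojection, and the names `projection_stage`, `image_stage`, `gain` and `bias` are all assumptions chosen for illustration, and it uses a 2D image with 1D projections rather than a 3D volume with 2D X-ray projections. It only shows the order of operations: correct in the projection domain, reconstruct, then correct in the image domain.

```python
import numpy as np

def forward_project(image):
    """Toy projector (assumption): two parallel views, summing along each axis."""
    return image.sum(axis=0), image.sum(axis=1)

def back_project(proj_cols, proj_rows):
    """Toy reconstruction (assumption): unfiltered backprojection of the two views."""
    h, w = proj_rows.size, proj_cols.size
    return (proj_cols[None, :] / h + proj_rows[:, None] / w) / 2

def projection_stage(proj, gain):
    """Hypothetical placeholder for a projection-domain network: one learnable gain."""
    return gain * proj

def image_stage(image, bias):
    """Hypothetical placeholder for an image-domain network: one learnable offset."""
    return image + bias

def dual_domain(proj_cols, proj_rows, gain, bias):
    """Projection-domain correction, then reconstruction, then image-domain correction."""
    corrected = [projection_stage(p, gain) for p in (proj_cols, proj_rows)]
    reconstructed = back_project(*corrected)
    return image_stage(reconstructed, bias)

# Usage: a uniform toy image passes through unchanged when gain=1 and bias=0.
image = np.ones((4, 4))
result = dual_domain(*forward_project(image), gain=1.0, bias=0.0)
```

Because every stage in this sketch is a differentiable operation, a framework with automatic differentiation could in principle adjust the parameters of both stages against a single loss on the final image, which is the sense in which such a pipeline can be trained end to end.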

Both the quantitative evaluation and clinical experts confirmed the superiority of the motion-mitigated CBCT images over the original images. A clinical study is currently underway.

In addition, time-resolved 4D-CBCT images were investigated, which require physically plausible concepts to model the anatomical motion. A motion model was learned that uses external conditioning data (e.g. a breathing signal) to predict the changes between different motion states, so-called displacement vector fields, in a single forward pass.
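As an illustration of what a displacement vector field does, the following Python sketch warps a small 2D image with a field derived from a scalar breathing signal. The function `toy_motion_model` is a hypothetical stand-in for a learned model (here it simply shifts everything along one axis in proportion to the signal), and the backward-warping convention and bilinear interpolation are assumptions made for this example, not details taken from the project.

```python
import numpy as np

def toy_motion_model(breathing_amplitude, shape):
    """Hypothetical stand-in for a learned motion model.

    Returns a displacement vector field of shape (2, H, W), in pixels, that
    displaces along axis 0 in proportion to the conditioning signal.
    """
    dvf = np.zeros((2, *shape))
    dvf[0] = breathing_amplitude
    return dvf

def warp(image, dvf):
    """Backward-warp a 2D image: out[y, x] = image[y + dvf[0], x + dvf[1]].

    Sample positions are clipped to the image border and interpolated bilinearly.
    """
    h, w = image.shape
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    y = np.clip(yy + dvf[0], 0, h - 1)
    x = np.clip(xx + dvf[1], 0, w - 1)
    y0 = np.floor(y).astype(int)
    x0 = np.floor(x).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = (1 - wx) * image[y0, x0] + wx * image[y0, x1]
    bottom = (1 - wx) * image[y1, x0] + wx * image[y1, x1]
    return (1 - wy) * top + wy * bottom

# Usage: one bright pixel, moved to a new motion state by one pixel.
reference = np.zeros((5, 5))
reference[2, 2] = 1.0
moved = warp(reference, toy_motion_model(1.0, reference.shape))
```

In a 4D-CBCT setting the field would have three components per voxel and would vary spatially; the uniform shift here only keeps the example easy to follow.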

In summary, the project demonstrated that motion-mitigated CBCT images are valuable in the clinical workflow, including for advanced applications such as adaptive radiation therapy. The results are being finalized for submission to a peer-reviewed scientific journal and were presented as a poster at the recent AAPM (American Association of Physicists in Medicine) scientific conference in Washington, DC.

The research collaboration between Varian and ZHAW continues in the recently started follow-up project AC3T, now also including additional project partners from South Korea.